Giving the Finger to the Bird

This is an expanded, written monologue of the Apotheosis podcast episode of the same name. Podcast music by Nullsleep.

You can blame Ben Brown for this one.

I mentioned Ben in the previous Apotheosis post. He's currently a Microsoft engineer working on Bot Framework Composer, but he made a name for himself in technology and the Austin tech scene through a variety of applications and companies. His best-known work is Botkit, which Microsoft acquired (I suspect as an acqui-hire to gain Ben's services) and made part of the Bot Framework. Ben and I are only a year apart in age. We both grew up with similar interests and managed to connect later in life over our work with chatbots (I'm still a Microsoft AI MVP for Bot Framework community contributions) and a shared love of Max Headroom.

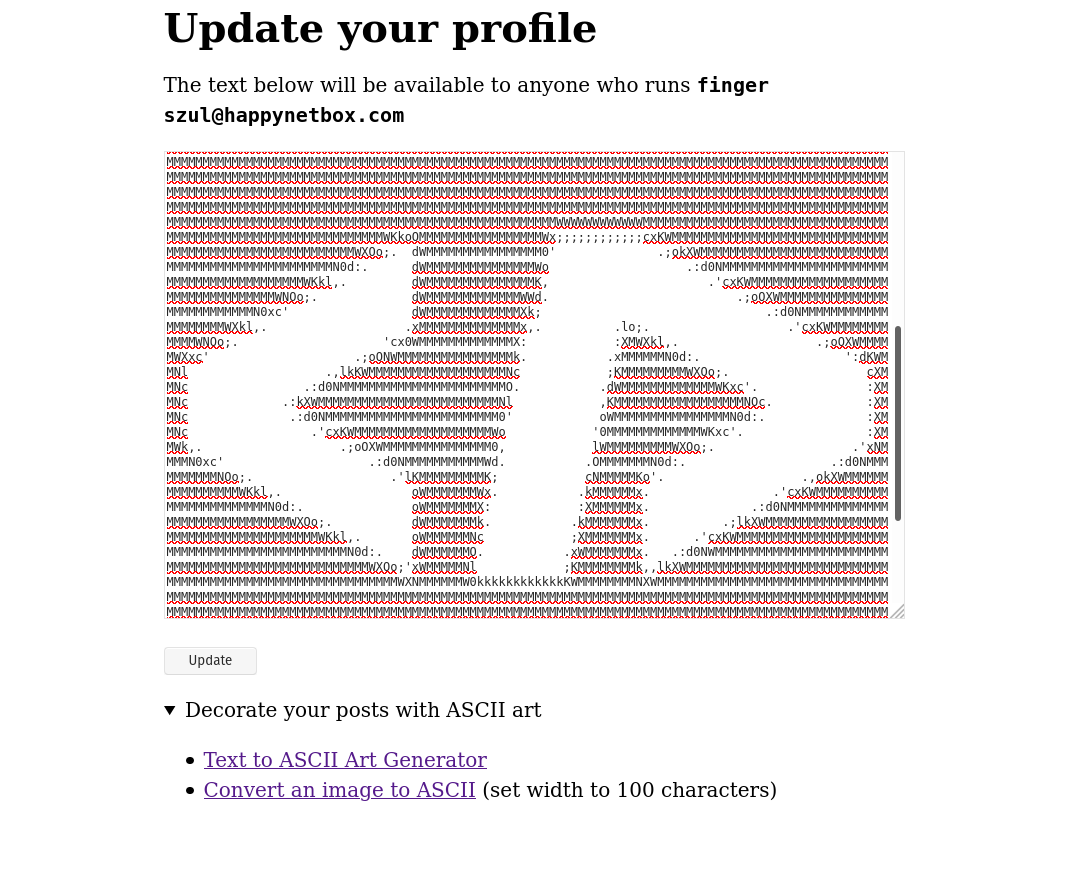

While I was busy putting the research together for a discussion on Gopher and Gemini, Ben was busy building out a version of the Finger protocol that limited the security risk and added a web interface for updating the text that would traditionally be in your .plan or .project file in a Linux/Unix home directory. Welcome to HappyNetBox!

We'll return to HappyNetBox in a bit because it's a good lesson in the history of social software. But if you weren't jacking into Unix machines in the 1980s, you might be wondering what the Finger protocol is, or maybe you just need a quick refresher.

On Unix-based systems, before the finger command arrived, information on logged-in users was pretty much limited to the who command. On my current Linux laptop, this is the result:

```
szul     :1           2021-04-30 06:27 (:1)
```

This doesn't really provide us with much other than who is logged into the system. In 1971, Les Earnest wrote the finger program to provide more relevant information. If I run the finger program on my computer, I get the following:

```
Login     Name            Tty      Idle  Login Time   Office     Office Phone
szul      Michael Szul   *:1             Apr 30 06:27 (:1)
```

If I finger the user directly, I get:

```
Login: szul                             Name: Michael Szul
Directory: /home/szul                   Shell: /usr/bin/zsh
On since Fri Apr 30 06:27 (EDT) on :1 from :1 (messages off)
No mail.
No Plan.
```

If I actually had information in a .plan file in my home directory, it would look like this:

```
Login: szul                             Name: Michael Szul
Directory: /home/szul                   Shell: /usr/bin/zsh
On since Fri May 7 06:27 (EDT) on :1 from :1 (messages off)
No mail.
Plan:
===============================
======== szul ================
===============================
Check out https://codepunk.io
I am in meetings until 4:30pm :(
```

The finger program is distinct from, but related to, the finger protocol. The protocol is served by a daemon (fingerd) that listens on the network, typically on TCP port 79, and the finger program is a client for it.

When a finger request is made, the client contacts the corresponding server or network device. The server receives the request, processes the query, and responds, and then the connection is closed.

The protocol was used to provide identifying information like the examples I've shown. It was mostly meant for communication among co-workers, hence the office phone. A response could include other details such as email address, name, and login time. Through the .plan file, it could also carry more verbose exposition on just about anything. The .plan was often used for humor, but don't discount the fact that it was also used for regular status updates, in the same manner as micro-blogging today.
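
To make that request/response cycle concrete, here is a minimal Python sketch of a finger client. It assumes the query format described in RFC 1288: an optional `/W` verbose switch, an optional username, then a CRLF line ending. It also assumes that the server signals the end of its reply by closing the connection. The function names are my own, and most hosts today no longer run a finger daemon at all.

```python
import socket

FINGER_PORT = 79  # well-known TCP port for the finger daemon


def build_query(user: str = "", verbose: bool = False) -> bytes:
    """Build one query line: optional /W switch, optional user, then CRLF."""
    parts = []
    if verbose:
        parts.append("/W")  # ask the server for a more verbose reply
    if user:
        parts.append(user)  # an empty query asks for everyone logged in
    return (" ".join(parts) + "\r\n").encode("ascii")


def finger(host: str, user: str = "", verbose: bool = False,
           port: int = FINGER_PORT, timeout: float = 5.0) -> str:
    """Send a single query and return everything the server sends back."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(build_query(user, verbose))
        chunks = []
        while True:
            data = sock.recv(4096)
            if not data:  # server closed the connection: the reply is complete
                break
            chunks.append(data)
    return b"".join(chunks).decode("utf-8", errors="replace")
```

Against a host that still runs fingerd, `finger("finger.example.org", "szul")` (a placeholder host) should return plain text much like the .plan output above. The whole exchange is a single line in and free-form text out, with no headers or framing beyond the connection closing.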

The problem with finger was that, as networks and the Internet grew, the information it supplied (real names, home directories, login and idle times) could be used in social engineering attacks during the early days of hacking. As a result, finger soon came to be regarded as a security risk.
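
How much a finger server reveals is largely a server-side choice. Below is a hedged Python sketch of a deliberately restricted responder. It is not HappyNetBox's code, just an illustration of the general idea. It assumes a hypothetical in-memory `PLANS` dictionary instead of reading .plan files from home directories. It refuses the empty query that would list every logged-in user, refuses user@host forwarding, and bounds how much of the request line it reads. The port 7979 is an arbitrary unprivileged stand-in for port 79.

```python
import socketserver
from typing import Mapping

# Hypothetical plan text keyed by username; a traditional fingerd would
# read each user's ~/.plan file instead.
PLANS = {
    "szul": "Check out https://codepunk.io\nI am in meetings until 4:30pm :(\n",
}

MAX_QUERY = 512  # read at most this many bytes of the request line


def respond(query: bytes, plans: Mapping[str, str]) -> bytes:
    """Turn one raw query line into a reply that exposes only plan text."""
    line = query.decode("ascii", errors="replace").strip()
    if line.startswith("/W"):
        line = line[2:].strip()  # accept the verbose switch but ignore it
    if not line:
        return b"User listing is disabled.\r\n"  # no "who is logged in" dump
    if "@" in line:
        return b"Forwarding is disabled.\r\n"  # no relaying to other hosts
    plan = plans.get(line)
    if plan is None:
        return b"No such user.\r\n"
    # send the plan with CRLF line endings
    return ("\r\n".join(plan.splitlines()) + "\r\n").encode("utf-8")


class FingerHandler(socketserver.StreamRequestHandler):
    def handle(self) -> None:
        query = self.rfile.readline(MAX_QUERY)  # bounded read of one line
        self.wfile.write(respond(query, PLANS))
        # the server closes the connection after handle() returns,
        # which tells the client the reply is complete


def serve(host: str = "127.0.0.1", port: int = 7979) -> None:
    """Serve finger queries until interrupted."""
    with socketserver.TCPServer((host, port), FingerHandler) as server:
        server.serve_forever()
```

With `serve()` running, `printf 'szul\r\n' | nc 127.0.0.1 7979` should print the plan text, while an empty query gets only the refusal line. Keeping the query handling in a pure `respond` function makes the policy easy to test without opening any sockets.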

Of course, finger—or more accurately the finger daemon—had a starring role in the drama surrounding the infamous Morris Worm in 1988.

Robert Tappan Morris began working on a computer worm while a graduate student at Cornell University. His father, Robert Morris, was a well-known cryptographer who worked for Bell Labs and later the National Security Agency. Morris claimed that his original intention was to draw attention to security weaknesses in computers and computer networks. The worm, eventually named after him, exploited multiple vulnerabilities in Unix-based machines, specifically DEC VAX systems running BSD. One of its attack vectors was a buffer overflow in the finger daemon (fingerd).

Once on a machine, the worm would check whether a copy of itself was already present, and if so, it wouldn't replicate. However, Morris wanted to outsmart system administrators who might try to fake the presence check. He had the worm replicate one time in seven (about 14% of the time) regardless of whether an infection was present. This created runaway replication, with multiple infections on a single machine slowing processing to a crawl. Not unlike a denial-of-service attack, it crashed many machines.[1]

> As computer experts worked feverishly on a fix, the question of who was responsible became more urgent. Shortly after the attack, a dismayed programmer contacted two friends, admitting he’d launched the worm and despairing that it had spiraled dangerously out of control. He asked one friend to relay an anonymous message across the Internet on his behalf, with a brief apology and guidance for removing the program. Ironically, few received the message in time because the network had been so damaged by the worm.
>
> Independently, the other friend made an anonymous call to The New York Times, which would soon splash news of the attack across its front pages. The friend told a reporter that he knew who built the program, saying it was meant as a harmless experiment and that its spread was the result of a programming error. In follow-up conversations with the reporter, the friend inadvertently referred to the worm’s author by his initials, RTM. Using that information, The Times soon confirmed and publicly reported that the culprit was a 23-year-old Cornell University graduate student named Robert Tappan Morris.

Estimates in the aftermath of the Morris Worm put the number of infected computers anywhere from a few thousand to 60,000, but the damages were vague.[2]

> The worm did not damage or destroy files, but it still packed a punch. Vital military and university functions slowed to a crawl. Emails were delayed for days. The network community labored to figure out how the worm worked and how to remove it. Some institutions wiped their systems; others disconnected their computers from the network for as long as a week. The exact damages were difficult to quantify, but estimates started at $100,000 and soared into the millions.

Morris became the first person ever convicted under the draconian Computer Fraud and Abuse Act. He was sentenced to three years of probation and fined several thousand dollars.

I'm no fan of Clifford Stoll, but in writing about the Morris Worm, he was correct in his assessment that:

> If all the systems on the Arpanet ran Berkeley Unix, the virus would have disabled all fifty thousand of them.

Stoll was talking about the dangers of monoculture. Reliance on one specific technology or way of doing things can result in long-term, devastating damage when a major event occurs. Monoculture is often cited as a danger in agriculture, where monoculture farming has reduced the variety of produce. Then a blight hits and wipes out the entire crop because it targets that particular variety. Bananas are currently experiencing exactly this problem.

In technology, as discussed previously, monoculture has emerged in the browser wars, in desktop software, and in Internet protocols. As I mentioned before:

> […] as e-commerce became the fundamental rallying cry of the Dot Com Boom, those attempting to make money off the Internet, didn't have time for competing protocols. The web served the need for design-oriented marketing, so all the investment went there. We lost the other protocols to the junk piles of academic experimentation and with that loss, prostrated ourselves at the feet of advertising and marketing.

Those working in open source who are "reinventing the wheel," so to speak, are doing so out of a rejection of the monoculture that surrounds us.

With HappyNetBox, Ben Brown has essentially reintroduced us to the original status message. We often forget that most things around us aren't new. They are just modern interpretations of older ideas, usually twisted so that marketing and advertising can get a dollar out of them.

Don't believe me? First we had the finger protocol, but tell me, how different are status updates from the away messages of AOL Instant Messenger? AOL Instant Messenger let you create custom away messages that could even include rich text, color, and formatting. During the early-to-mid 2000s, teenagers were using away messages to give status updates, detail drama, tell jokes, and over-communicate. At the same time, Friendster was cratering and MySpace was surging. Every advertising company around was trying to figure out how to turn these emerging "social networks" into revenue-generating businesses.

And they did.

So maybe now, we should take a look at that monoculture again, and do something about it.